Earlier this week, Facebook announced plans to expand its artificial intelligence-based suicide prevention campaign. The company shared how the program has been working so far, essentially using "proactive detection" technology to scan posts for signs that the user might be suicidal and flagging them to Facebook moderators for next steps, which range from offering links to online resources all the way up to contacting first responders on the user's behalf.

The goal is to shorten the amount of time between a concerning post and Facebook being brought into the conversation. Previously, Facebook wouldn't get involved unless a friend manually flagged someone's post as seeming suicidal.

"When someone is expressing thoughts of suicide, it’s important to get them help as quickly as possible," wrote Facebook Vice President of Product Management Guy Rosen, on the company's blog.

Experts in suicide prevention are pretty excited about this plan, though some have minor reservations. Facebook's plans are "important and groundbreaking," Dr. Christine Moutier, chief medical officer at the American Foundation for Suicide Prevention (AFSP), writes in an e-mail, lauding this type of "creative and innovative solution."

"With the help of large tech companies like Facebook, we can reduce the suicide rate in the United States, furthering AFSP's Project 2025 goal: to reduce the rate 20% in the US by 2025," she writes.

AI has "demonstrated itself as an effective tool for identifying people who may be in crisis," writes National Suicide Prevention Lifeline Director John Draper, noting there are still some challenges when it comes to machine learning technology understanding the difference between someone joking with their friends and someone who may actually be on the verge of self-harm.

"We’ve been advising Facebook on how to provide assistance for persons in crisis through creating a more supportive environment on their social platform so that people who need help can get it faster, which ultimately, can save lives," he writes, adding that "notifying police is an absolute last resort."

Joining the chorus of supporters of Facebook's plan is Dr. Victor Schwartz of The Jed Foundation, who has two caveats related to transparency and training.

"We applaud Facebook's efforts to enhance their ability to identify and respond to users who may be at increased risk for self-harm," Schwartz writes in an e-mail. "We hope that Facebook users would be made aware of this new protocol and would be alerted to the impending intervention, and that first responders would be properly trained to respond to those in possible crisis."

To the latter point, first responders should be properly trained to respond to suicidal individuals and others experiencing a mental health crisis, but are often not. For example, earlier this month, a suicidal woman in Cobb County, Georgia, was shot and killed by police after she grabbed a gun. In August, a similar scene unfolded in Florida.

There is no doubt that de-escalating this type of situation, especially when the person involved is armed, is difficult. That's why ensuring proper training is so important.

Skepticism remains high in the online community, with some worrying that this life-saving tech could be repurposed for more nefarious pursuits in the future. Though Facebook has provided a pretty decent high-level overview of this new tool — which users apparently won't be able to opt out of — the company has been extremely light on details of how it actually works.

Responding to critics worried about how Facebook may use this technology in the future, the company's chief security officer, Alex Stamos, stressed that "it's important to set good norms today around weighing data use versus utility and be thoughtful about bias creeping in."

It's perfectly rational to have concerns over the use and misuse of AI, but the truth is that tech like this is going to play a big role in coming years.

Even with these concerns about misuse, and about whether first responders are properly trained, Facebook's new approach to suicide prevention is something humanity can be cautiously optimistic about, but only time will truly tell.

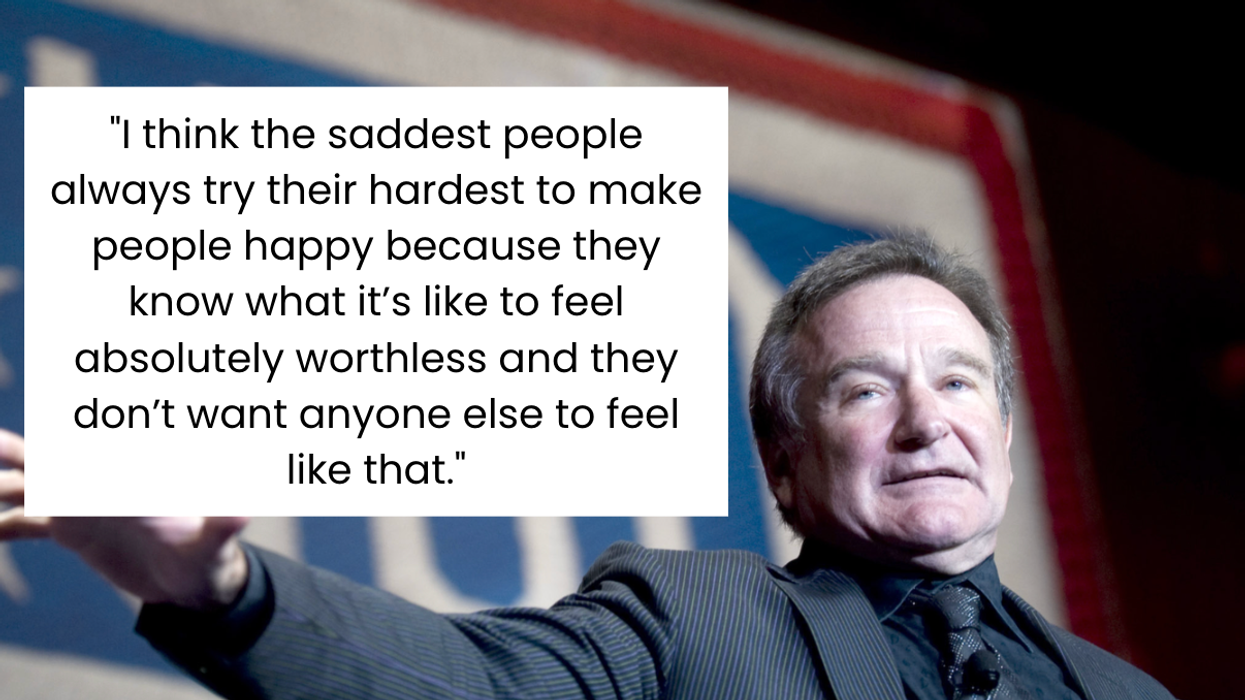

Robin Williams performs for military men and women as part of a United Service Organizations (USO) show on board Camp Phoenix in December 2007.

Will your current friends still be with you after seven years?

Professor shares how many years a friendship must last before it'll become lifelong

Think of your best friend. How long have you known them? Growing up, children make friends and say they’ll be best friends forever. That’s where “BFF” came from, for crying out loud. But is the concept of the lifelong friend real? If so, how many years of friendship will have to bloom before a friendship goes the distance? Well, a Dutch study may have the answer to that last question.

Sociologist Gerald Mollenhorst and his team in the Netherlands did extensive research on friendships and reported some interesting findings from their surveys and studies. Mollenhorst found that over half of your friendships will “shed” within seven years. However, the relationships that go past the seven-year mark tend to last. This led to the prevailing theory that most friendships lasting more than seven years would endure throughout a person’s lifetime.

In Mollenhorst’s findings, lifelong friendships seem to come down to one thing: reciprocal effort. The primary reason so many friendships form and fade within seven-year cycles has much to do with a person’s age and life stage. A lot of people lose touch with elementary and high school friends because so many leave home to attend college. Work friends change when someone gets promoted or finds a better job in a different state. Some friends get married and have children, reducing one-on-one time together, and thus a friendship fades. It’s easy to lose friends, and naturally harder to keep them, when you’re no longer in proximity.

Some people on Reddit even wonder if lifelong friendships are actually real or just a romanticized thought nowadays. However, older commenters showed that lifelong friendship is still possible:

“I met my friend on the first day of kindergarten. Maybe not the very first day, but within the first week. We were texting each other stupid memes just yesterday. This year we’ll both celebrate our 58th birthdays.”

“My oldest friend and I met when she was just 5 and I was 9. Next-door neighbors. We're now both over 60 and still talk weekly and visit at least twice a year.”

“I’m 55. I’ve just spent a weekend with friends I met 24 and 32 years ago respectively. I’m also still in touch with my penpal in the States. I was 15 when we started writing to each other.”

“My friends (3 of them) go back to my college days in my 20’s that I still talk to a minimum of once a week. I'm in my early 60s now.”

“We ebb and flow. Sometimes many years will pass as we go through different things and phases. Nobody gets buttsore if we aren’t in touch all the time. In our 50s we don’t try and argue or be petty like we did before. But I love them. I don’t need a weekly lunch to know that. I could make a call right now if I needed something. Same with them.”

Maintaining a friendship for life is never guaranteed, but psychotherapists say there are ways to help a friendship last. Both participants need to make room for patience and place greater weight on their similarities than on the differences that may develop over time. It also helps to be tolerant of large distances and long gaps between visits. None of this is easy, and it requires both people to be equally invested in keeping the friendship alive and keeping it from becoming stagnant.

As tough as it sounds, it is still possible. You may be a fortunate person who can name several friends you’ve kept for over seven years or over seventy years. But if you’re not, every new friendship you make has the same chance and potential of being lifelong.