A few decades ago, reading people’s minds seemed like pure science fiction. But with the rise of “neural decoding,” neuroscientists can now decode brain activity by monitoring brainwaves. While previous studies have focused on reconstructing images and words, researchers have now taken a groundbreaking step: reconstructing “music” from the mind. In August 2023, scientists at the University of California, Berkeley, successfully reconstructed a 1979 Pink Floyd song by decoding the electrical signals in listeners’ brainwaves. The study was published in the journal PLOS Biology.

To carry out the study, lead researchers Robert Knight and Ludovic Bellier analyzed the electrical activity of 29 epileptic patients undergoing brain surgery at Albany Medical Center in New York. As the patients were being operated on, Pink Floyd’s “Another Brick in the Wall, Part 1” played in the surgery room. Electrodes placed on the patients’ brains recorded the electrical activity as they listened to the song. Later, Bellier reconstructed the song from this activity using artificial intelligence models. The resulting piece of music was both eerie and intriguing. “It sounds a bit like they’re speaking underwater, but it’s our first shot at this,” Knight told The Guardian.

This experiment provided several insights into the connection between music, muscles, and mind. According to the university’s press release, the reconstruction showed that it is feasible to record and translate brain waves to capture the musical elements of speech, as well as the syllables. In humans, these musical elements, called prosody (rhythm, stress, accent, and intonation), carry meaning that the words alone do not convey. Because these intracranial electroencephalography (iEEG) recordings could be made only from the surface of the brain, this research was as close as one could get to the auditory centers.

This could prove valuable for people who have difficulty speaking, such as those affected by a stroke or muscle paralysis. “It’s a wonderful result,” said Knight, per the press release. “It gives you the ability to decode not only the linguistic content but some of the prosodic content of speech, some of the affect. I think that’s what we’ve begun to crack the code on.”

Speaking about why they chose only music and not voice for their research, Knight told Fortune that it is because “music is universal.” He added, “It preceded language development, I think, and is cross-cultural. If I go to other countries, I don’t know what they’re saying to me in their language, but I can appreciate their music.” More importantly, he said, “Music allows us to add semantics, extraction, prosody, emotion, and rhythm to language.”

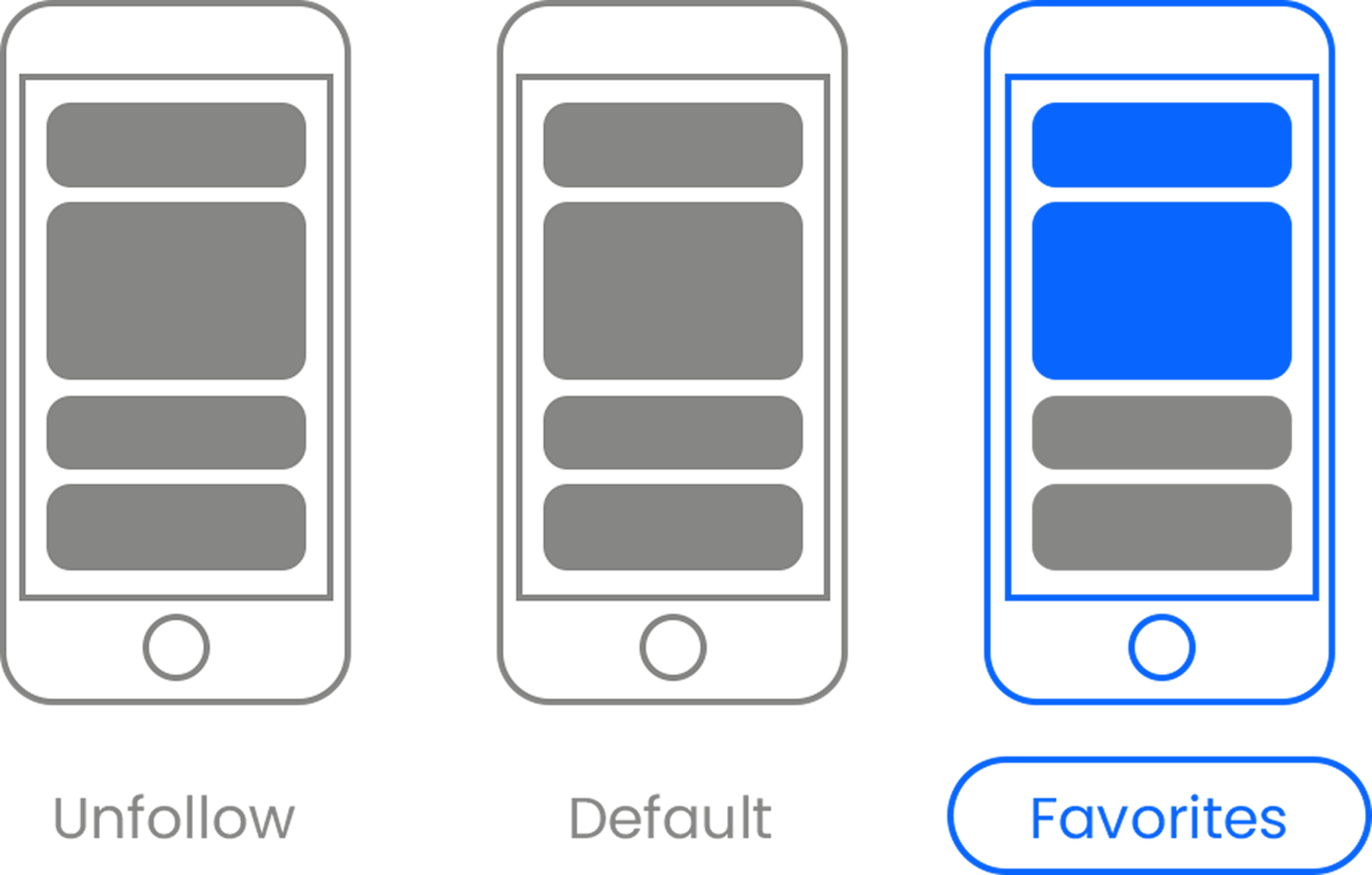

“Right now, the technology is more like a keyboard for the mind,” Bellier told Fortune. “You can’t read your thoughts from a keyboard. You need to push the buttons. And it makes kind of a robotic voice; for sure there’s less of what I call expressive freedom.”

The study not only unveiled a way to synthesize speech but also pinpointed new brain areas involved in detecting rhythm, such as a thrumming guitar. The researchers also confirmed that the right side of the brain is more attuned to music than the left side. “Language is more left brain. Music is more distributed, with a bias toward right,” said Knight, per the press release. “It wasn’t clear it would be the same with musical stimuli,” Bellier added. “So here, we confirm that that’s not just a speech-specific thing, but that it’s more fundamental to the auditory system and the way it processes both speech and music.”

This article originally appeared 2 months ago.

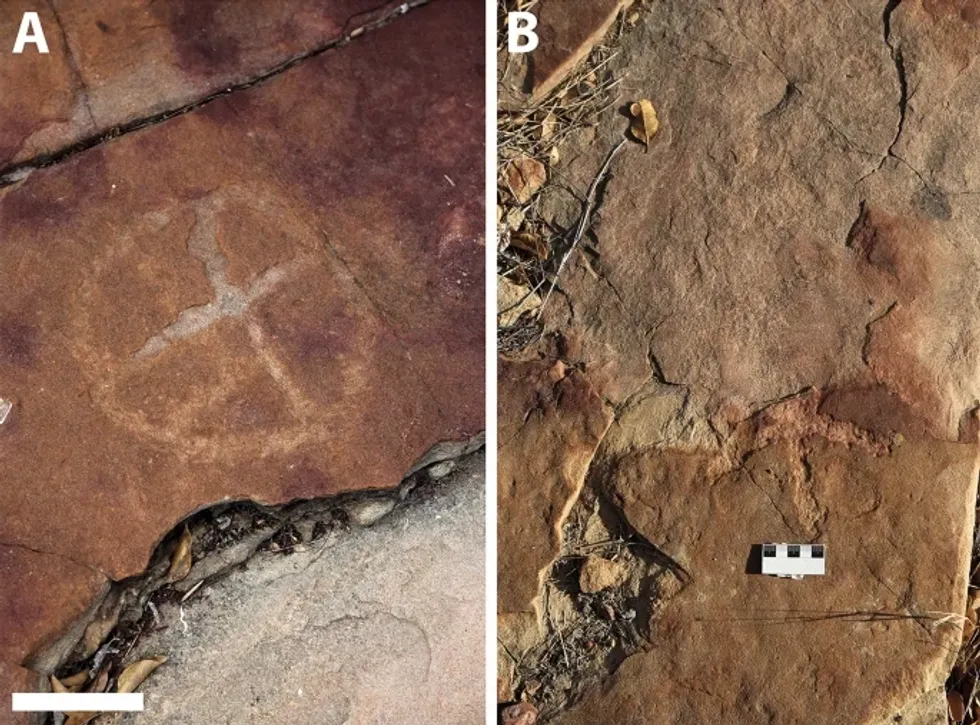

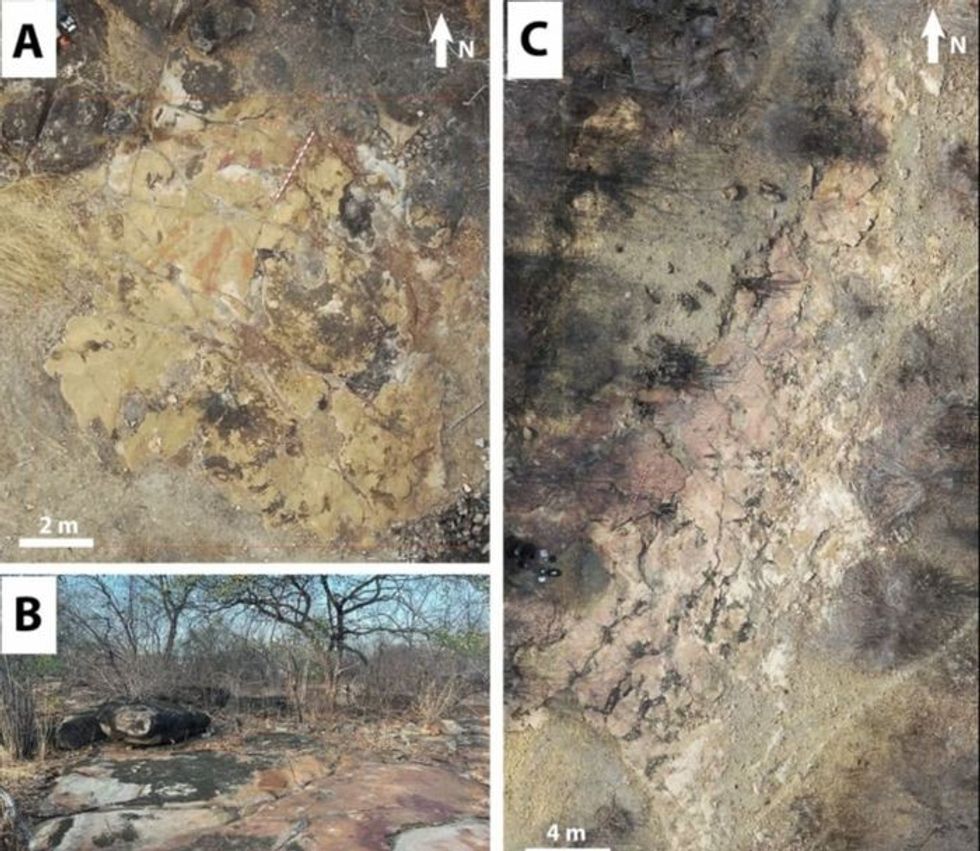
